The subject is inspired by one of the most popular TED YouTube videos.

I was recently load testing all of the new code together and found a funny thing:

After a certain point, lowering the delay for items leads to dramatic degradation of the preprocessor manager.

I've added statistics logging to the worker threads and found out that they are free most of the time, so this time it looks like the problem is in the preprocessor manager.

After reducing the items' delay to something less than 30 seconds, I saw that the preprocessor managers drop their processing speed from around 80 000 items to 500-800 items per 5 seconds.

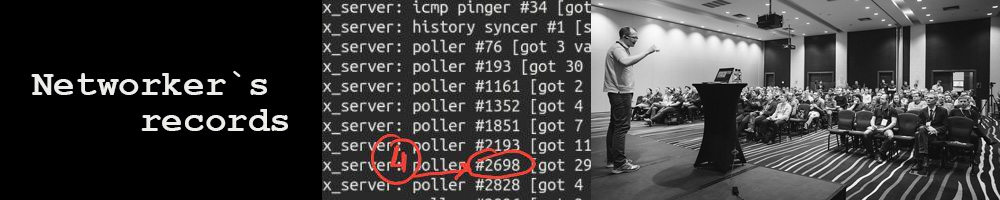

pic: semop 4 is shown, but the real problem is semop 1

Perhaps it's still locking. There aren't that many semop operations according to strace, but visually it's the operation where the process freezes most. I couldn't find a simple way to calculate wait time via strace or something similar, so I have to rely on my eyes.

(update: RTFM, the -w and -c options combined make strace produce statistics based on real time)

At first I thought prerproc_sync_configuration was guilty, but that was the wrong path.

After an hour of research I found another bottleneck. And this one is also a global architectural one: it's the history cache locks.

The situation is almost the same as it was with the configuration lock. Access to the history storage is globally locked, which leads to the "single CPU problem".

With LOTS of results coming from the pollers, the threads become limited by the speed of a single core when putting data into the cache and reading it back.
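One way to put a number on the "single CPU problem" is Amdahl's law, which the post itself doesn't use. This is only a rough sketch: the serial fraction (the share of work done under the global lock) is a made-up illustrative input, not something measured here.

```python
def amdahl_speedup(serial_fraction, workers):
    """Upper bound on speedup when `serial_fraction` of the work
    must run one thread at a time (e.g. under a global lock)."""
    if not 0.0 <= serial_fraction <= 1.0:
        raise ValueError("serial_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    parallel_fraction = 1.0 - serial_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)


def max_speedup(serial_fraction):
    """Limit of the speedup as the number of workers grows without bound."""
    if serial_fraction == 0.0:
        return float("inf")
    return 1.0 / serial_fraction
```

If everything happens under the lock (serial fraction 1.0), adding threads gives no speedup at all, which is the behaviour described above.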

Solution? Well, it's nothing new: let's split it into 4 independent caches, each locked separately and accessed by its own set of threads.

Most of dbcache.c has to be fixed, as it's not only the cache that has to be split into 4 parts; there are also lots of static vars declared.

Not sure if this is something needed for production, but it might be a good prototyping experiment.
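The idea of several independently locked caches can be sketched in a few lines. This is a toy illustration in Python, not the dbcache.c code: the class name, the modulo routing by item ID and the helper function are all assumptions. Python threads also won't show the real speedup; the point is only the locking structure.

```python
import threading

NUM_SHARDS = 4  # the post splits the history cache into 4 parts


class ShardedCache:
    """Toy stand-in for a cache split into independently locked parts."""

    def __init__(self, num_shards=NUM_SHARDS):
        self.shards = [{} for _ in range(num_shards)]
        self.locks = [threading.Lock() for _ in range(num_shards)]

    def shard_index(self, itemid):
        # Hypothetical routing rule: item ID modulo the shard count.
        return itemid % len(self.shards)

    def add_value(self, itemid, value):
        idx = self.shard_index(itemid)
        with self.locks[idx]:  # only this shard is blocked
            self.shards[idx].setdefault(itemid, []).append(value)

    def get_values(self, itemid):
        idx = self.shard_index(itemid)
        with self.locks[idx]:
            return list(self.shards[idx].get(itemid, []))


def fill_in_parallel(cache, num_threads, items_per_thread):
    """Start writer threads, each adding values for its own range of item IDs."""
    def writer(first_itemid):
        for itemid in range(first_itemid, first_itemid + items_per_thread):
            cache.add_value(itemid, itemid * 10)

    threads = [
        threading.Thread(target=writer, args=(n * items_per_thread,))
        for n in range(num_threads)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return cache
```

Writers that hit different shards never wait for each other; they only contend when two items map to the same shard.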

There is also an open question: why does performance rise when I split the preprocessing manager into 4 workers on Xeon5645 CPUs?

(upd: perhaps the answer is that the preprocessor manager has lots of other processing work to do, which, being split across different CPUs, gives some combined profit)

Whatever.

Just to make sure the job of maximizing NVPS is completed, I fixed dbcache.c and the HistoryCache locking to support 4 history caches, to see if this is _the last_ bottleneck.

I did what I assume is safe locking: rare and non-critical operations still lock the entire cache, but the most critical ones lock only their own part of the cache.

Data flushes from the preprocessor manager to the cache, where speed is needed most, are done by locking one of the four sub-caches, so such additions can go in parallel. Then I also had to fix lots of static vars in dbcache.c, as they seem to be used in parallel.

But I have to admit I failed at this experiment. I spent 3 days of free time, but was still getting a very unreliable daemon which kept crashing after 1-2 minutes.

So at the moment I am giving up on this; perhaps I will return to it later.
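The two-level locking described above (lock everything for rare operations, lock one part for flushes) might look roughly like this. It is a hypothetical Python sketch, not the actual HistoryCache code: the class and method names are invented, and taking the part locks in a fixed order is my assumption about how to keep the "lock everything" path from deadlocking.

```python
import threading
from contextlib import contextmanager


class SplitCache:
    """Toy cache in four parts, with per-part and whole-cache locking."""

    def __init__(self, num_parts=4):
        self.parts = [[] for _ in range(num_parts)]
        self.locks = [threading.Lock() for _ in range(num_parts)]

    @contextmanager
    def lock_all(self):
        # Rare path: take every part lock, always in ascending order,
        # so two whole-cache operations cannot deadlock each other.
        acquired = []
        try:
            for lock in self.locks:
                lock.acquire()
                acquired.append(lock)
            yield
        finally:
            for lock in reversed(acquired):
                lock.release()

    def flush(self, part, values):
        # Hot path: only one part is locked, so flushes to
        # different parts can proceed in parallel.
        with self.locks[part]:
            self.parts[part].extend(values)

    def total_values(self):
        # Rare path: a consistent count across all parts.
        with self.lock_all():
            return sum(len(p) for p in self.parts)
```

Note that a per-part lock protects only that part. Any state shared between parts (like the static vars in dbcache.c) still needs its own protection or has to be split as well.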

pic: four preprocessor managers, doing 118k NVPS combined (note: idle time is calculated wrong)

And there is one more reason.

I returned to the test machine with the E3-1280. And wow! Zabbix could poll, process and save 118k NVPS (pic) with fixed queueing and 4 preprocessor managers. I would say it's 105-110k stable while still having 18-20% idle. And that's kind of a lot. My initial goal was to reach 50k. Considering we need 10k on one instance and 2k on the other, it's enough capacity to grow for a while.